用Twitter的cursor方式进行Web数据分页

本文讨论Web应用中实现数据分页功能,不同的技术实现方式的性能方区别。

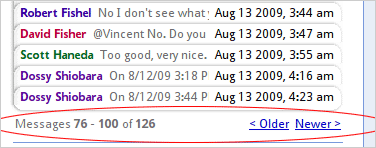

上图功能的技术实现方法拿MySQL来举例就是

select * from msgs where thread_id = ? limit page * count, count

不过在看Twitter API的时候,我们却发现不少接口使用cursor的方法,而不用page, count这样直观的形式,如 followers ids 接口

URL:

http://twitter.com/followers/ids.format

Returns an array of numeric IDs for every user following the specified user.

Parameters:

* cursor. Required. Breaks the results into pages. Provide a value of -1 to begin paging. Provide values as returned to in the response body’s next_cursor and previous_cursor attributes to page back and forth in the list.

o Example: http://twitter.com/followers/ids/barackobama.xml?cursor=-1

o Example: http://twitter.com/followers/ids/barackobama.xml?cursor=-1300794057949944903

从上面描述可以看到,http://twitter.com/followers/ids.xml 这个调用需要传cursor参数来进行分页,而不是传统的 url?page=n&count=n的形式。这样做有什么优点呢?是否让每个cursor保持一个当时数据集的镜像?防止由于结果集实时改变而产生查询结果有重复内容?

在Google Groups这篇Cursor Expiration讨论中Twitter的架构师John Kalucki提到

A cursor is an opaque deletion-tolerant index into a Btree keyed by source

userid and modification time. It brings you to a point in time in the

reverse chron sorted list. So, since you can’t change the past, other than

erasing it, it’s effectively stable. (Modifications bubble to the top.) But

you have to deal with additions at the list head and also block shrinkage

due to deletions, so your blocks begin to overlap quite a bit as the data

ages. (If you cache cursors and read much later, you’ll see the first few

rows of cursor[n+1]’s block as duplicates of the last rows of cursor[n]’s

block. The intersection cardinality is equal to the number of deletions in

cursor[n]’s block). Still, there may be value in caching these cursors and

then heuristically rebalancing them when the overlap proportion crosses some

threshold.

在另外一篇new cursor-based pagination not multithread-friendly中John又提到

The page based approach does not scale with large sets. We can no

longer support this kind of API without throwing a painful number of

503s.

Working with row-counts forces the data store to recount rows in an O

(n^2) manner. Cursors avoid this issue by allowing practically

constant time access to the next block. The cost becomes O(n/

block_size) which, yes, is O(n), but a graceful one given n < 10^7 and

a block_size of 5000. The cursor approach provides a more complete and

consistent result set.

Proportionally, very few users require multiple page fetches with a

page size of 5,000.

Also, scraping the social graph repeatedly at high speed is could

often be considered a low-value, borderline abusive use of the social

graph API.

通过这两段文字我们已经很清楚了,对于大结果集的数据,使用cursor方式的目的主要是为了极大地提高性能。还是拿MySQL为例说明,比如翻页到100,000条时,不用cursor,对应的SQL为

select * from msgs limit 100000, 100

在一个百万记录的表上,第一次执行这条SQL需要5秒以上。

假定我们使用表的主键的值作为cursor_id, 使用cursor分页方式对应的SQL可以优化为

select * from msgs where id > cursor_id limit 100;

同样的表中,通常只需要100ms以下, 效率会提高几十倍。MySQL limit性能差别也可参看我3年前写的一篇不成熟的文章 MySQL LIMIT 的性能问题。

结论

建议Web应用中大数据集翻页可以采用这种cursor方式,不过此方法缺点是翻页时必须连续,不能跳页。

建议继续学习:

- MYSQL分页limit速度太慢优化方法 (阅读:5532)

- 深入理解Linux内存管理机制(一) (阅读:4722)

- Mysql中的分页写法 (阅读:4648)

- 独创比百度、Google分页还强的分页类 (阅读:4621)

- 合理使用MySQL的Limit进行分页 (阅读:3826)

- 高效的MySQL分页 (阅读:3650)

- 交互模式之分页还是加载? (阅读:2867)

扫一扫订阅我的微信号:IT技术博客大学习

- 作者:Tim 来源: Tim[后端技术]

- 标签: cursor 分页 用Twitter

- 发布时间:2010-01-25 14:56:02

-

[1167] WordPress插件开发 -- 在插件使用

[1167] WordPress插件开发 -- 在插件使用 -

[68] 解决 nginx 反向代理网页首尾出现神秘字

[68] 解决 nginx 反向代理网页首尾出现神秘字 -

[46] Java开发岗位面试题归类汇总

[46] Java开发岗位面试题归类汇总 -

[44] web开发设计人员不可不用的在线web工具和

[44] web开发设计人员不可不用的在线web工具和 -

[33] Rax 系列教程(长列表)

[33] Rax 系列教程(长列表) -

[32] 手机产品设计方向

[32] 手机产品设计方向 -

[32] 一句话crontab实现防ssh暴力破解

[32] 一句话crontab实现防ssh暴力破解 -

[29] 程序员疫苗:代码注入

[29] 程序员疫苗:代码注入 -

[28] oracle技术方面的路线

[28] oracle技术方面的路线 -

[28] 如何建立合适的索引?

[28] 如何建立合适的索引?